The Dutch Exploratory Workshop on Testing held its 9th peer conference in the woods of Driebergen with the following test geeks: Jean-Paul Varwijk, Maaike Brinkhof, Philip Hoeben, Joris Meerts, James Thomas, Ruud Cox, Joep Schuurkes, Elizabeth Zagroba, Beren van Daele, Jeroen Schutter, Andrei Contan, Bart Knaack, Jeroen van Seeters, Huib Schoots, Zeger van Heze, Adina Moldovan, Simon ‘Peter’ Schrijver (facilitator) and Ard Kramer (content owner).

The concept of the peer conference is quite simple: based on experience talks, we covered the topic of testability. The content owner expected a variety of opinions about this topic and, spoiler alert, there was!

Of course there are different ways of looking at testability, and I would like to focus on three angles:

Intrinsic testability: This is more focused on the product.

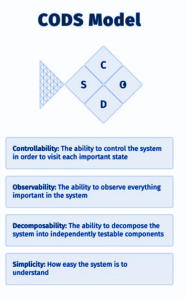

To define intrinsic testability you definitely need to know what the value of the product is and which risks threaten it (the context of the product). This will help you to define the testability. The models and mnemonics Rob Meaney has presented are very usable. James Thomas mentioned the CODS model.

The observability mentioned in this model is the central word that Maaike used in her experience talk. In her DevOps environment risks have lower impact, which means that software can go to production faster. She also saw that software in production provides more interesting and relevant information than running the software in an inferior test environment. So don’t use the word testability anymore, a word non-testers are not attracted to; just use the word observability.

Extrinsic testability: This is testability that relates not to the product itself but to everything around it, which led to stories about the impact of testers. If you really want to improve the intrinsic testability you need to be motivated: to improve your testing skills and to deal with the failures that follow from choosing the wrong level of testability. Are you working in an environment that is safe enough that mistakes are accepted and testability can be improved? Up front you will never know whether the chosen testability will be good enough. So what do you learn about the things you did not know before you started testing?

Other stories were about how non-testers look at testability. Firm statements were made that testability as a concept is hard to discuss with non-testers: they don’t feel the need to do ‘something’ with testability. It becomes interesting if you can get the subject on the table by changing your language or even the approach you use. For example, you can use the language of speed: if you want to get the product to production faster, how can we facilitate this process in the best possible way? You will then have a discussion about facilities that will increase your testability.

But there were also stories about mental testability. For example, there were stories where a tester’s ethical boundaries were challenged: he had to test systems in organizations that try to make money out of processes that violate ethical and legal boundaries. As a tester, he asked himself whether such systems are mentally testable.

So should we stop using the word testability and use all kinds of guerrilla tactics to get the subject on the table? Don’t mention the word anymore, just do it!

DEWT9 was made possible by the (moral) support of the Association for Software Testing: Thank you very much!

References:

James Bach’s Heuristics of Software Testability

Maria Kedemo and Ben Kelly’s Dimensions of Testability

Ash Winter and Rob Meaney’s 10 Ps of Testability

Rob Meaney’s CODS

A Peer Workshop on Testability by DEWT

Testability? Shhh!